Containers are a lightweight form of virtualization with many advantages over traditional approaches like virtual machines. A container runs an application, together with its dependencies, in an environment isolated from the other processes on the host. The popularity of containers exploded with the release of Docker, a tool that simplifies the process of building, running, and deploying them.

Some advantages of containers:

- Speed: Containers are lightweight and start in seconds, unlike virtual machines (such as those run under VirtualBox), which usually take a couple of minutes to boot. One can quickly download, start, and work with a container, then stop and delete it to reclaim disk space when it isn’t needed anymore. Containers are a great fit for ephemeral workloads.

- Resource isolation: It is possible to specify how much CPU power, memory and disk a container may use.

- Packaging: Containers are packaged as “images” containing all of an application’s dependencies. On top of that, Docker defines an image format and a public image registry, Docker Hub. It’s possible to share an application using Docker Hub and receive updates by running simple commands.

- Security: By using existing kernel technologies, containers leverage proven security concepts like namespaces, control groups, AppArmor, and SELinux.

- Infrastructure utilization: Containers can improve resource utilization of your infrastructure, as it’s possible to securely run many different applications on a single Linux machine.

All these features together let developers build applications in exactly the same environment as they would run in production.
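To make the resource isolation point concrete, here is a minimal sketch that assembles a `docker run` command with CPU and memory limits. The helper name `docker_run_command` and the `drupal` image are illustrative assumptions; `--cpus` and `--memory` are `docker run` flags in current Docker releases, and disk limits are left out because they depend on the storage driver in use.

```python
import shlex


def docker_run_command(image, cpus=None, memory=None, extra_args=()):
    """Build a `docker run` argument list with optional resource limits.

    Hypothetical helper for illustration; it only builds the command,
    it does not execute it.
    """
    cmd = ["docker", "run", "--rm"]
    if cpus is not None:
        # Cap the container at this many CPUs (fractions are allowed).
        cmd.append(f"--cpus={cpus}")
    if memory is not None:
        # Hard memory limit, e.g. "512m" or "2g".
        cmd.append(f"--memory={memory}")
    cmd.extend(extra_args)
    cmd.append(image)
    return cmd


if __name__ == "__main__":
    # The image name is an assumption chosen for the example.
    print(shlex.join(docker_run_command("drupal", cpus=1.5, memory="512m")))
```

Returning an argument list rather than a string keeps the command safe to hand to `subprocess.run` without shell quoting issues.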

At Acquia, we use containers across our different teams for a variety of purposes. Our initial use cases were local development environments, continuous integration testing, and repeatable isolated package builds. The recently announced Acquia Pipelines uses containers to run builds and test Drupal websites prior to their deployment on Acquia Cloud. Our own team uses containers to deploy microservices at scale, with the help of Docker, Apache Mesos, and Apache Aurora.

Running application filesystems at scale

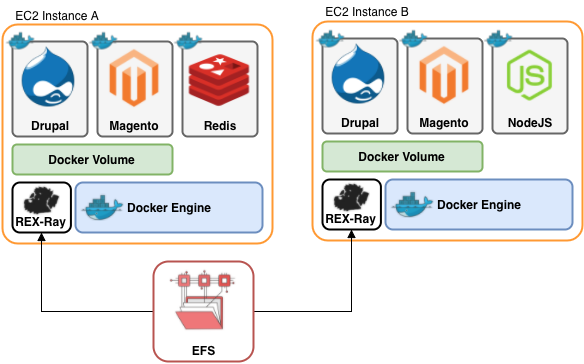

Applications like Drupal and Magento require access to a POSIX file system to store persistent data. In the case of Drupal, the file system is used for user-uploaded files and aggregated CSS and JavaScript files. For a single application instance running in a container, this is not a problem, but when the application needs to scale out, all the running containers expect to read from and write to the same file system. Docker ships with support for volumes that can be shared among containers, but the built-in local volume driver constrains those containers to run on a single machine, which isn’t a scalable solution.
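The single-machine case described above can be sketched as follows: one named volume, created with Docker's built-in local driver, mounted into several containers on the same host. The helper name, volume name, mount path, image, and container names are all assumptions made for illustration.

```python
import shlex


def shared_volume_commands(volume, mount_path, image, replicas):
    """Return the docker commands that share one named volume among
    `replicas` containers on a single host (hypothetical helper)."""
    # Create the named volume once; the default driver is "local",
    # so the data lives on this host only.
    commands = [["docker", "volume", "create", volume]]
    for i in range(1, replicas + 1):
        commands.append([
            "docker", "run", "-d",
            "--name", f"web{i}",
            # Every container mounts the same volume at the same path.
            "-v", f"{volume}:{mount_path}",
            image,
        ])
    return commands


if __name__ == "__main__":
    # All names below are example values, not taken from our setup.
    for cmd in shared_volume_commands("drupal-files", "/var/www/files", "drupal", 2):
        print(shlex.join(cmd))
```

Because the volume is local to one Docker host, containers scheduled on other machines cannot see the same files, which is the limitation this section describes.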

Fortunately, Docker has pluggable volume support, and we can use a plugin to provide a different filesystem backend for our containers. We chose REX-Ray from {code} by Dell EMC. REX-Ray is a storage orchestration tool that provides a set of common commands for managing multiple storage platforms. Built on top of the libStorage framework, REX-Ray enables persistent storage for container runtimes such as Docker and Apache Mesos.

REX-Ray, libStorage, and the journey to add a new driver

REX-Ray is very flexible and can easily orchestrate Docker, libStorage, and the filesystem to produce a shareable Docker volume. Having REX-Ray handle this orchestration enabled us to introduce shared volumes without making any changes to our scheduler, Apache Aurora. REX-Ray’s close integration with Docker, via libStorage, allows us to use our existing code to pass volume information via Docker parameters whenever an application is launched. Those parameters are automatically forwarded to REX-Ray, which does the heavy lifting to attach the necessary volume.
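As a rough illustration of passing volume information through Docker parameters, the sketch below models each parameter as a name/value pair and renders the pairs as `docker run` flags. The helper names, the name/value dictionary shape, and the example volume are assumptions for illustration and not our scheduler's actual schema; `rexray` is assumed to be the name under which the REX-Ray volume driver is registered with Docker.

```python
def volume_parameters(volume, mount_path, driver="rexray"):
    """Describe a driver-backed volume as name/value Docker parameters.

    Hypothetical helper; the default driver name is an assumption.
    """
    return [
        {"name": "volume-driver", "value": driver},
        {"name": "volume", "value": f"{volume}:{mount_path}"},
    ]


def to_docker_flags(parameters):
    """Render name/value parameters as `--name=value` flags for `docker run`."""
    return [f"--{p['name']}={p['value']}" for p in parameters]


if __name__ == "__main__":
    # Volume name and mount path are example values.
    params = volume_parameters("drupal-files", "/var/www/files")
    print(" ".join(["docker", "run", *to_docker_flags(params), "drupal"]))
```

With this shape, the launching code only names a volume and a driver; creating, attaching, and mounting the volume is left to the volume driver.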

With the recent announcement of the new Amazon Elastic File System (EFS), we decided to give it a try as a backing store for some of our applications. We dug into the code for libStorage, and after asking a couple of questions in the {code} community Slack channel, we had a working Amazon EFS driver for libStorage. After some cleanup and added test coverage, we were happy to contribute this driver back to the libStorage project.

Conclusion

Using REX-Ray and libStorage enabled us to quickly integrate a new filesystem backend into our existing Docker and Apache Mesos platform and gave our application containers a way to easily scale horizontally with all the benefits of Amazon EFS.

A special thanks to Clinton Kitson, Andrew Kutz, and Chris Duchense from the {code} by Dell EMC team. It was a pleasure collaborating with the {code} team as we worked through the process of writing a new libStorage driver. We look forward to continuing to be a part of this community and project as we look at extending libStorage even further with new drivers and functionality.