Automated Bot Traffic - Strategies to Handle and Manage It

In the enterprise realm, automation is a transformative force and a double-edged sword, bringing both efficiency gains and new challenges. It is reshaping industry norms and may redefine how businesses operate.

Automation brings many advantages, with higher productivity at the forefront. However, it has also introduced complexities, such as the proliferation of bots. These automated scripts can serve legitimate and useful functions, but they also present a security concern. Malicious bots in particular pose a serious threat, carrying out activities that range from Distributed Denial of Service (DDoS) attacks to unauthorized content scraping and data breaches.

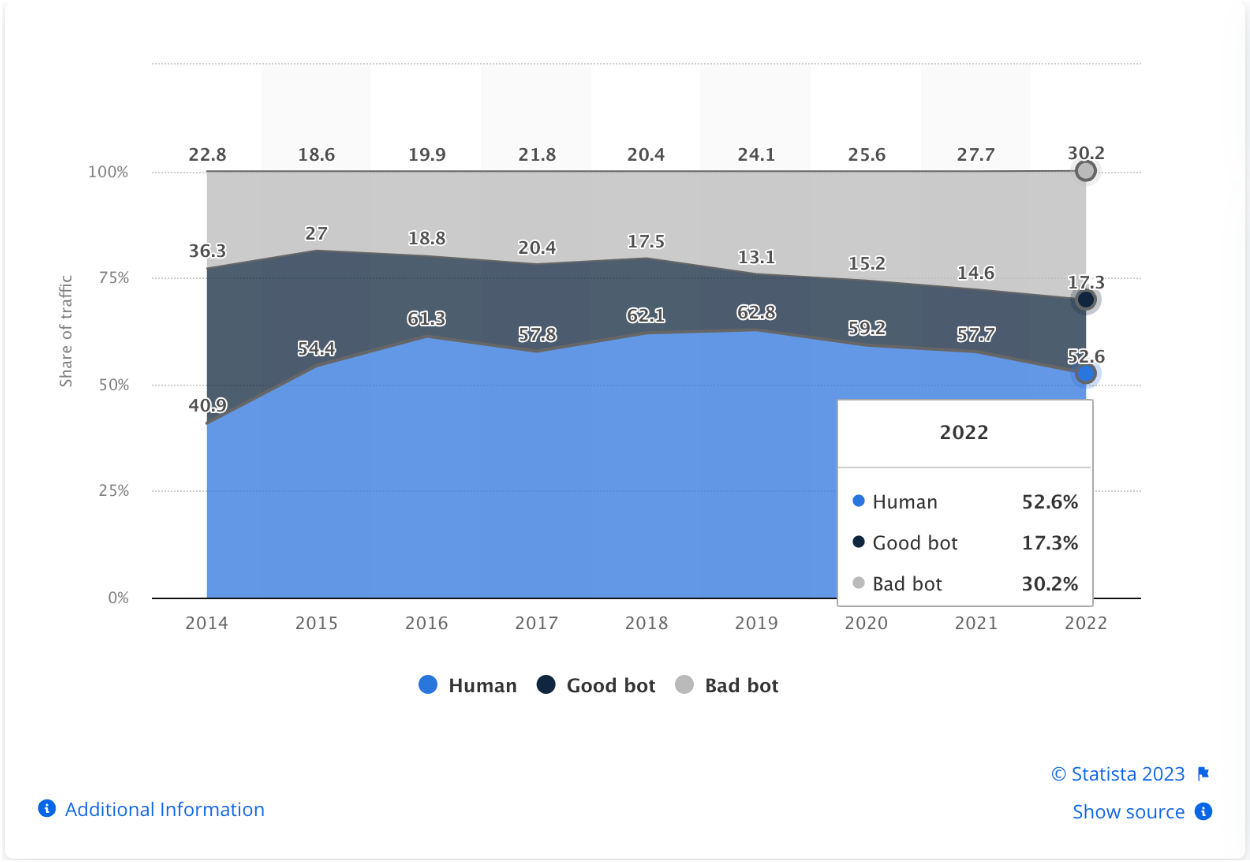

Recent research indicates that bots account for a significant share of web traffic: approximately 47% in 2022. Alarmingly, bad bots alone made up about 30% of all traffic. For a corporate entity managing substantial online interactions, this translates into substantial exposure. For instance, if your enterprise's digital platforms register 100 million visits a month, roughly 47 million of them may be bot activity, and about 30 million may be malicious. This underscores the need for robust cybersecurity measures and vigilant monitoring to safeguard enterprise assets and maintain the integrity of digital operations.

(Image Source: Statista)
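The exposure estimate in the example above is simple arithmetic, sketched here in Python. The sketch assumes, as the 30-million figure implies, that both the 47% bot share and the 30% bad-bot share are fractions of all traffic; the function name and defaults are illustrative, not taken from the cited research.

```python
def estimate_bot_exposure(monthly_visits: int,
                          bot_share: float = 0.47,
                          bad_bot_share: float = 0.30) -> tuple[int, int]:
    """Return (bot_visits, bad_bot_visits) for a monthly visit count.

    Both shares are assumed to be fractions of *total* traffic, so the
    bad-bot estimate is monthly_visits * bad_bot_share, not
    monthly_visits * bot_share * bad_bot_share.
    """
    bot_visits = round(monthly_visits * bot_share)
    bad_bot_visits = round(monthly_visits * bad_bot_share)
    return bot_visits, bad_bot_visits


bots, bad_bots = estimate_bot_exposure(100_000_000)
print(f"bot visits: {bots:,}; bad-bot visits: {bad_bots:,}")
# bot visits: 47,000,000; bad-bot visits: 30,000,000
```

Plugging in your own monthly visit count gives a first-order sense of how much traffic your defenses need to handle.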

What are the negative impacts of bot traffic on business operations?

- Downtime: While it’s common to see only bad bots as troublemakers, good bots, such as the crawlers of reputable search engines like Yandex or Bing, can unintentionally contribute to website performance issues or downtime through aggressive crawling, especially during high-traffic events like Black Friday sales. Although these bots aim to index content and keep search engine databases up to date, their intensive crawling can strain server resources, leading to slower response times or server overload.

During peak traffic periods, the combined load from legitimate user requests and aggressive bot crawling can overwhelm the website infrastructure, causing a degraded user experience, longer page load times, and, in extreme cases, website downtime. This is particularly damaging for e-commerce sites that rely heavily on holiday-season sales, where it hits the bottom line directly.

- IT costs: Bot attacks compound IT woes by not only reducing revenue but also inflating operating expenses in various ways. They can drive up the cost of maintaining online services, including spikes in Content Delivery Network (CDN) fees. Because of the large data transfers that bot traffic generates, companies can see CDN-related expenses rise by as much as 70%.

- Skewed analytics: Bots create substantial challenges for marketing by distorting analytics data. They generate artificial traffic, clicks, and interactions that produce misleading statistics, distorting insights and the decisions based on them. Web scraping bots are one example: while extracting data from a website, they register as activity and create a false impression of user engagement, which distorts marketing analytics.

- Slow server response & bad user experience: Bot traffic adds load on top of whatever already slows your server's response time, and the result is a poor user experience. Common bottlenecks include sluggish application logic, slow database queries, slow routing, inefficient frameworks or libraries, and CPU or memory starvation. Addressing these bottlenecks is crucial to keeping your server responsive and limiting the impact of bot traffic on user experience.

- Log flooding: Log flooding, often induced by bot activity, poses several challenges. It burdens servers and consumes resources, degrading performance and increasing storage costs. The deluge also makes it hard for IT teams to monitor and analyze logs effectively, which delays incident response and produces false alarms that contribute to alert fatigue.

How to identify bot traffic

- Server log analysis: Multiple commercial and open-source tools help identify suspicious IP addresses, user agents, and request patterns. Well-known solutions include Fluentd (open source), Logstash (open source), Sumo Logic, Splunk, Loggly, and New Relic. These tools can be configured to monitor patterns and set up alerts that track unusual traffic, so you can take appropriate action to mitigate any negative impact of bot traffic.

- Utilize analytics tools: Google Analytics, Heap Analytics, and similar tools can also help you analyze traffic sources, referrers, and unusual spikes to distinguish human traffic from bot traffic. The following analytics anomalies are hallmarks of bot traffic:

- Abnormally high pageviews

- Abnormally high bounce rate

- Surprisingly high or low session duration

- Junk conversions

- Spike in traffic from an unexpected location

- Published guides can help you filter bot traffic out of Google Analytics.

- Distinguish good vs bad bots: While it’s important to keep track of bot traffic versus human traffic, it’s vital to understand that not all bot traffic is bad. Known good bots such as the Google and Bing search crawlers, Semrush, and UptimeRobot serve specific purposes, and blocking them can harm the business and its operations.

The tools mentioned above can help identify bots, but marketing, web, and IT teams should also work together on a process for separating the good ones from the bad. Starting with a weekly review and gradually moving to a monthly cadence helps teams get a handle on bot traffic.
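The kind of pattern the server-log tools above look for can be sketched in a few lines of Python. This sketch assumes access logs in the common Apache/Nginx "combined" format; the request threshold and user-agent markers are hypothetical and should be tuned to your own traffic. It deliberately flags good bots such as Googlebot too, because separating good bots from bad is a separate step.

```python
import re
from collections import Counter, defaultdict

# Apache/Nginx "combined" log format:
# ip ident user [time] "METHOD path protocol" status bytes "referrer" "user-agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) \S+" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Hypothetical substrings that commonly appear in automated user agents.
AUTOMATION_MARKERS = ("bot", "crawler", "spider", "curl", "python-requests")


def flag_suspicious_ips(lines, max_requests=100):
    """Return {ip: sorted reasons} for IPs whose requests look automated."""
    counts = Counter()
    reasons = defaultdict(set)
    for line in lines:
        match = LOG_PATTERN.match(line)
        if not match:
            continue  # skip lines that are not in combined format
        ip, agent = match["ip"], match["agent"].lower()
        counts[ip] += 1
        if agent in ("", "-"):
            reasons[ip].add("missing user agent")
        elif any(marker in agent for marker in AUTOMATION_MARKERS):
            reasons[ip].add("automation user agent")
    for ip, count in counts.items():
        if count > max_requests:  # simple per-IP volume threshold
            reasons[ip].add(f"high volume ({count} requests)")
    return {ip: sorted(found) for ip, found in reasons.items()}


sample = [
    '198.51.100.7 - - [10/Oct/2023:13:55:36 +0000] "GET / HTTP/1.1" 200 512 "-" "Mozilla/5.0 (Windows NT 10.0)"',
    '203.0.113.9 - - [10/Oct/2023:13:55:37 +0000] "POST /user/login HTTP/1.1" 403 0 "-" "python-requests/2.31"',
] + ['192.0.2.44 - - [10/Oct/2023:13:56:00 +0000] "GET /products HTTP/1.1" 200 1024 "-" "Mozilla/5.0"'] * 150

print(flag_suspicious_ips(sample))
# {'203.0.113.9': ['automation user agent'], '192.0.2.44': ['high volume (150 requests)']}
```

A real deployment would usually count requests per time window rather than per file, which is exactly the kind of aggregation and alerting the tools listed above provide.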
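The analytics hallmarks listed above can be turned into simple checks on session data exported from your analytics tool. The Python sketch below covers pageviews, session duration, location, and bounce rate; the `Session` fields, thresholds, and expected countries are all hypothetical and need tuning against your own baseline. Junk conversions usually need business-specific rules and are left out.

```python
from dataclasses import dataclass
from typing import List

# Hypothetical thresholds; derive real ones from your normal human traffic.
MAX_PAGEVIEWS = 50
MIN_DURATION_S = 1.0
MAX_DURATION_S = 4 * 60 * 60
EXPECTED_COUNTRIES = {"US", "CA"}


@dataclass
class Session:
    pageviews: int
    duration_s: float
    bounced: bool
    country: str  # ISO 3166-1 alpha-2 code


def bot_signals(session: Session) -> List[str]:
    """Return the bot hallmarks a single session exhibits."""
    signals = []
    if session.pageviews > MAX_PAGEVIEWS:
        signals.append("abnormally high pageviews")
    if not MIN_DURATION_S <= session.duration_s <= MAX_DURATION_S:
        signals.append("implausible session duration")
    if session.country not in EXPECTED_COUNTRIES:
        signals.append("unexpected location")
    return signals


def bounce_rate(sessions: List[Session]) -> float:
    """Share of sessions that bounced; compare it against your usual baseline."""
    return sum(s.bounced for s in sessions) / len(sessions) if sessions else 0.0


sessions = [
    Session(pageviews=3, duration_s=95.0, bounced=False, country="US"),
    Session(pageviews=400, duration_s=0.4, bounced=False, country="XX"),  # "XX": placeholder code
    Session(pageviews=1, duration_s=0.0, bounced=True, country="US"),
]
for s in sessions:
    print(bot_signals(s))
print(f"bounce rate: {bounce_rate(sessions):.0%}")
```

No single signal proves a session is automated; sessions that trip several at once are the ones worth reviewing.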
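One practical way to separate genuine good bots from impostors that merely copy their user-agent strings is the reverse-then-forward DNS check that Google and Bing document for their crawlers. First, resolve the IP to a hostname. Next, confirm that the hostname belongs to the search engine's domain. Finally, resolve that hostname back and confirm that it returns the same IP. The Python sketch below assumes IPv4 addresses and checks only the Googlebot and Bingbot domains. Stub resolvers are injected so the example runs offline, and the sample hostnames are illustrative.

```python
import socket

# Hostname suffixes published by the search engines for their crawlers.
CRAWLER_DOMAINS = {
    "googlebot": (".googlebot.com", ".google.com"),
    "bingbot": (".search.msn.com",),
}


def is_verified_crawler(ip, claimed_bot,
                        reverse_lookup=socket.gethostbyaddr,
                        forward_lookup=socket.gethostbyname_ex):
    """True if `ip` reverse-resolves to the claimed crawler's domain and back."""
    suffixes = CRAWLER_DOMAINS.get(claimed_bot.lower())
    if suffixes is None:
        return False  # unknown crawler name: nothing to verify against
    try:
        hostname, _, _ = reverse_lookup(ip)              # step 1: PTR lookup
        if not hostname.rstrip(".").endswith(suffixes):  # step 2: domain check
            return False
        _, _, addresses = forward_lookup(hostname)       # step 3: A lookup (IPv4)
    except OSError:  # socket.herror / socket.gaierror are OSError subclasses
        return False
    return ip in addresses


# Stub resolvers so the example runs without network access.
PTR = {"66.249.66.1": "crawl-66-249-66-1.googlebot.com",
       "203.0.113.9": "googlebot.example.net"}
A = {name: [ip] for ip, name in PTR.items()}


def fake_reverse(ip):
    if ip not in PTR:
        raise socket.herror(1, "Unknown host")
    return PTR[ip], [], [ip]


def fake_forward(name):
    if name not in A:
        raise socket.gaierror(-2, "Name or service not known")
    return name, [], A[name]


print(is_verified_crawler("66.249.66.1", "Googlebot", fake_reverse, fake_forward))  # True
print(is_verified_crawler("203.0.113.9", "Googlebot", fake_reverse, fake_forward))  # False
```

The forward lookup matters because anyone who controls an IP's reverse DNS can make it claim a `googlebot.com` name, but only the real domain owner controls what that name resolves back to. In production, drop the stub arguments so the real `socket` functions are used, and cache results to avoid a DNS round trip per request.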

How to minimize the risk from bot traffic

- Utilize a Web Application Firewall (WAF): Tailor your WAF settings to your business's geographical footprint. For instance, if your primary audience is in the US and you're seeing bot traffic from countries like Russia, Ukraine, India, or China, it may be beneficial to block or restrict access from these regions. Apply this strategy judiciously, however. Consider more nuanced measures, such as blocking only POST requests from specific countries or targeting certain URLs and user agents. Advanced WAFs let you chain conditions for more granular control over traffic.

- Rate Limiting: When bot attacks are intermittent, with elevated request volumes that fall short of a full-fledged DDoS attack, conventional rate limiting may be less effective. It becomes essential, however, when request volumes increase substantially. At that point, rate limiting is instrumental in protecting API services and offers a crucial line of defense against more pronounced and sustained attacks.

- Protect All Environments, Including Lower Ones: While it's common to secure production environments, don't overlook lower (development or staging) environments. Ideally, these should be inaccessible to external traffic or at least restricted to your corporate network. If you use proxy domains, or if password protection is feasible for these environments, the Shield Drupal module can shield them from external traffic.

- Application-level protection: Strengthening protection at the application level is essential for a robust defense against bots. A Web Application Firewall (WAF) is effective, but additional measures can be implemented within the application itself. For Drupal specifically, there are well-known modules designed to fight bots, and among the most widely recommended are Antibot, Honeypot, and CAPTCHA. Integrating these modules into a Drupal application strengthens its resilience against a variety of bot attacks and adds an extra layer of security.

- Implement FingerprintJS’s BotD: BotD, an open-source library from FingerprintJS, operates in the browser and employs fingerprinting techniques to detect automated tools and advanced threats in real time. While there is a more sophisticated Pro version better suited for enterprise-level needs, the open-source variant is an excellent starting point for development teams to understand and combat bot threats.

- Adopt a Robust Caching Strategy: While caching isn't a direct defense against bot attacks, it plays a crucial role in mitigating their impact. An effective caching strategy, using tools like Varnish together with edge/CDN caching, ensures that pages, particularly those that don't require a user session, are cached and served efficiently, so the site stays fast even under the additional load of bot traffic.

These approaches, when thoughtfully implemented, can significantly reduce the risk and impact of bot traffic in an enterprise setting.
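Chained WAF conditions, such as blocking only POST requests from outside your primary market to specific URLs, can be pictured as predicates combined with a logical AND. The Python sketch below is not any WAF vendor's rule syntax. The `Request` type, helper names, country set, and `/user/login` path are hypothetical, and the sketch assumes that your WAF or CDN has already resolved the country code.

```python
from dataclasses import dataclass
from typing import Callable, Iterable


@dataclass(frozen=True)
class Request:
    method: str
    country: str  # ISO 3166-1 alpha-2 code, assumed resolved upstream
    path: str
    user_agent: str


Condition = Callable[[Request], bool]


def method_is(*methods: str) -> Condition:
    return lambda req: req.method.upper() in methods


def country_not_in(countries: set) -> Condition:
    return lambda req: req.country not in countries


def path_startswith(prefix: str) -> Condition:
    return lambda req: req.path.startswith(prefix)


def all_of(*conditions: Condition) -> Condition:
    """Chain conditions: the rule matches only if every condition matches."""
    return lambda req: all(cond(req) for cond in conditions)


# Hypothetical rule for a US-focused site: block login POSTs from elsewhere,
# but leave GET requests (and therefore page browsing) untouched.
BLOCK_FOREIGN_LOGIN_POSTS = all_of(
    method_is("POST"),
    country_not_in({"US"}),
    path_startswith("/user/login"),
)


def evaluate(req: Request, rules: Iterable[Condition]) -> str:
    return "block" if any(rule(req) for rule in rules) else "allow"


rules = [BLOCK_FOREIGN_LOGIN_POSTS]
print(evaluate(Request("POST", "DE", "/user/login", "curl/8.0"), rules))    # block
print(evaluate(Request("GET", "DE", "/user/login", "Mozilla/5.0"), rules))  # allow
```

Narrow rules like this one cut off a common abuse path (credential stuffing) without blocking whole regions from reading the site.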
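Rate limiting is commonly implemented with a token bucket. Each client gets a bucket that refills at a steady rate and holds a limited burst, and a request is allowed only when a token is available. The Python sketch below keys buckets by client IP. The rate and capacity values are hypothetical, and the clock is injectable for testing. A production limiter would also need shared storage across servers and eviction of idle keys.

```python
import time
from typing import Callable, Dict, Tuple


class TokenBucketLimiter:
    """Per-client token bucket: refills `rate` tokens/second, holds up to `capacity`."""

    def __init__(self, rate: float, capacity: int,
                 clock: Callable[[], float] = time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.clock = clock
        self._buckets: Dict[str, Tuple[float, float]] = {}  # key -> (tokens, last_seen)

    def allow(self, key: str) -> bool:
        now = self.clock()
        tokens, last = self._buckets.get(key, (float(self.capacity), now))
        # Refill in proportion to elapsed time, never beyond capacity.
        tokens = min(float(self.capacity), tokens + (now - last) * self.rate)
        if tokens >= 1.0:
            self._buckets[key] = (tokens - 1.0, now)
            return True
        self._buckets[key] = (tokens, now)
        return False


# Usage with a fake clock: 1 request/second sustained, bursts of up to 5.
fake_now = [0.0]
limiter = TokenBucketLimiter(rate=1.0, capacity=5, clock=lambda: fake_now[0])
print([limiter.allow("203.0.113.9") for _ in range(7)])
# [True, True, True, True, True, False, False]
fake_now[0] = 2.0  # two seconds later, two tokens have refilled
print(limiter.allow("203.0.113.9"))  # True
```

The burst capacity lets legitimate users load a page with several API calls at once, while the refill rate caps what a single client can sustain.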
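For lower environments that should only be reachable from the corporate network, the core check is an IP allowlist. The Python sketch below uses the standard `ipaddress` module. The network ranges are documentation placeholders, not real corporate ranges. Behind a CDN or reverse proxy, the client IP must come from a trusted forwarding header rather than from the socket address. Password protection, such as that offered by the Shield module, can be layered on top.

```python
import ipaddress

# Placeholder corporate ranges (RFC 5737 / RFC 3849 documentation prefixes).
CORPORATE_NETWORKS = [
    ipaddress.ip_network("198.51.100.0/24"),
    ipaddress.ip_network("2001:db8::/32"),
]


def is_allowed(client_ip: str) -> bool:
    """Allow a request to a lower environment only from corporate networks."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        return False  # malformed address: deny by default
    # Membership is simply False when IP versions differ (IPv4 vs IPv6).
    return any(address in network for network in CORPORATE_NETWORKS)


for ip in ("198.51.100.23", "203.0.113.9", "2001:db8::1", "not-an-ip"):
    print(ip, "allowed" if is_allowed(ip) else "denied")
```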
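The honeypot approach, as used by modules like Honeypot, combines two signals. The first is a form field hidden from humans with CSS, which naive bots fill in anyway. The second is a minimum time between rendering the form and submitting it. The Python sketch below illustrates that general technique only and is not the Drupal module's code. The field name and time threshold are hypothetical. In practice, the render timestamp must be stored server-side or signed so that a bot cannot forge it.

```python
import time
from typing import Mapping, Optional

HONEYPOT_FIELD = "homepage_url"  # hypothetical field, hidden from humans via CSS
MIN_FILL_SECONDS = 5.0           # hypothetical minimum time a human needs


def looks_like_bot(form: Mapping[str, str], rendered_at: float,
                   submitted_at: Optional[float] = None) -> bool:
    """Flag a form submission that trips the honeypot field or the time limit."""
    if submitted_at is None:
        submitted_at = time.time()
    if form.get(HONEYPOT_FIELD, "").strip():
        return True  # a human never sees this field, so it should stay empty
    return submitted_at - rendered_at < MIN_FILL_SECONDS


print(looks_like_bot({"name": "Ann", HONEYPOT_FIELD: ""}, rendered_at=100.0, submitted_at=130.0))  # False
print(looks_like_bot({"name": "Ann", HONEYPOT_FIELD: "http://spam.example"}, rendered_at=100.0, submitted_at=130.0))  # True
print(looks_like_bot({"name": "Ann"}, rendered_at=100.0, submitted_at=101.5))  # True
```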
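A common way to make Varnish and CDN caching effective is to have the application mark anonymous pages as cacheable by shared caches and mark session-bound pages as private. The Python sketch below shows that decision. The path prefixes and TTL values are hypothetical. The sketch relies on standard HTTP behavior: `s-maxage` overrides `max-age` for shared caches such as CDNs and Varnish, while browsers use `max-age`.

```python
# Hypothetical paths that always depend on the visitor's session.
PRIVATE_PREFIXES = ("/user/", "/cart", "/checkout")


def cache_control_for(path: str, has_session: bool) -> str:
    """Choose a Cache-Control header value for a response."""
    if has_session or path.startswith(PRIVATE_PREFIXES):
        # Personalized content must never be stored by shared caches.
        return "private, no-store"
    # Anonymous pages: browsers keep them 5 minutes, CDN/Varnish for 1 hour,
    # so repeated bot hits are answered from cache instead of the origin.
    return "public, max-age=300, s-maxage=3600"


print(cache_control_for("/products/widget", has_session=False))
print(cache_control_for("/products/widget", has_session=True))
print(cache_control_for("/cart", has_session=False))
```

Keeping anonymous pages session-free is what makes this work. A session cookie set on every visit forces every bot request through to the origin.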

Optimizing for Good Bots

- Create a robots.txt file to manage crawl budget: For enterprises, effectively managing good bot traffic is crucial, and one efficient way to do this is by creating and configuring a robots.txt file. This file acts as a guide for search engine bots and other compliant crawlers, enabling control over your site's crawl budget. By specifying which parts of the site should be crawled and which should be avoided, you can direct bots to prioritize high-value content important for your SEO strategy while conserving server resources by limiting access to less critical areas.

Additionally, the robots.txt file can be used to set crawl delays, which helps manage how often bots visit and reduces server load, especially during peak traffic times or major marketing campaigns. Crawl-delay is a non-standard directive, though: Bing honors it, but Googlebot ignores it. This strategic approach ensures that your most important content is indexed efficiently, enhancing your online presence while maintaining optimal website performance and resource utilization.

- Crawl limiting using webmaster tools by these search engines: Major search engines provide webmaster tools, such as Google Search Console and Bing Webmaster Tools, that let website administrators set preferences for how frequently their site is crawled. By leveraging these tools, enterprises can define crawl-rate limits so that search engine bots do not overload their servers with excessive requests. Note that Google retired the Search Console crawl-rate limiter in January 2024, while Bing Webmaster Tools still offers crawl control.

This not only conserves server resources but also gives enterprises control over how often search engines crawl their content. The result is a balance between search engines' need to index relevant content and the enterprise's need to maintain optimal website performance, tailored to the specific requirements of the business.
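To see how a compliant crawler interprets robots.txt rules, Python's standard `urllib.robotparser` can parse a file and answer the same questions a crawler asks. The robots.txt content, crawler name, and URLs below are hypothetical. The parser reports the Crawl-delay value, but each crawler decides for itself whether to honor it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep crawlers out of low-value, resource-heavy
# pages and ask them to wait between requests.
ROBOTS_TXT = """\
User-agent: *
Disallow: /search
Disallow: /cart
Crawl-delay: 10
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

for url in ("https://www.example.com/products/widget",
            "https://www.example.com/search?q=shoes"):
    print(url, "->", "crawl" if parser.can_fetch("ExampleBot", url) else "skip")
print("crawl delay:", parser.crawl_delay("ExampleBot"))
```

Running a proposed robots.txt through a parser like this before deploying it is a cheap way to confirm you are not accidentally blocking the high-value pages your SEO strategy depends on.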